MetaLearner: A New Standard for AI Data Queries

Abstract:

Over the past two decades, the innovation in ERP systems has been minimal, leading to a cumbersome user experience requiring extensive training and reliance on skilled data professionals. However, the rise of Generative AI has introduced new methods, including Fine-tuning LLMs, Text2SQL, Retrieval-Augmented Generation (RAG), and Table-Augmented Generation (TAG), to improve data-driven decision-making by automating complex tasks. Among these, MetaLearner introduces a unique approach by building a proprietary data platform and fine-tuning AI models to interact effectively with it. This approach significantly reduces AI hallucination risks and enhances user experience.

MetaLearner demonstrates its superiority by achieving a 93.3% accuracy when tested against the TAG benchmark using the california_schools database. The platform outperforms existing solutions due to the business logic embedded in the AI and proprietary advanced search algorithms that synthesize SQL queries. Unlike competing methods that struggle with ambiguous data and unstructured databases, MetaLearner excels even in production environments with "dirty" tables, automating data retrieval and data engineering tasks.

MetaLearner’s ability to integrate business logic as CTEs and leverage reinforcement learning allows the AI to learn and improve over time. This positions MetaLearner as an essential tool for enterprises, enabling efficient decision-making and freeing users from manual data cleaning and query optimization tasks. With MetaLearner, organizations can confidently harness AI for data-driven decisions without the pitfalls of inaccurate outputs or hallucination risks.

1. Introduction

Over the past two decades, ERP systems have seen relatively little innovation to truly transform the user experience. To effectively use these systems, users must undergo months, if not years, of training to fully grasp how to maneuver them. Furthermore, the shortage of skilled data science professionals means that building an effective internal team is harder than ever [1]. To make matters worse, a majority of these highly skilled individuals’ time is spent on low-value tasks such as data retrieval and data cleaning [2]. Their most valuable time should be spent applying the analyses they have retrieved and contextualizing them within the organization to assist senior management in making data-driven decisions.

With the boom of Generative AI, many researchers have recognized this issue and have integrated Large Language Models (LLMs) to help address it [3]. Among the different approaches, we observe four mainstream methods to tackle this problem:

- Fine-tuning LLMs with Tabular Data

- Text-to-SQL

- Retrieval Augmented Generation (RAG)

- Table-Augmented Generation (TAG)

In the methods highlighted above, we will go through each one, outlining the strengths and key challenges. We will also reference the results for each method from the TAG paper [4]. Finally, we will introduce how MetaLearner tackles this problem without sacrificing the user experience while maintaining high efficiency and accuracy.

2. Finetuning LLMs with Tabular Data

The year 2024 marks a time when many companies, and even countries, are training their own LLMs [5]. Numerous organizations want to leverage the capabilities promised by LLMs, but concerns over data privacy and risks have been key obstacles. Despite the warnings, employees continue to paste proprietary company information into closed-source AI model services like ChatGPT, making the task of training their own LLMs more urgent [6]. The prospect of an AI that fully understands your business context and can assist in every business decision sounds like a no-brainer.

Fine-tuning LLMs to understand your domain and business context for daily conversations works well. However, overly extending their capabilities—such as encoding tabular data from databases into embeddings for the LLMs—can be disastrous. From a cost perspective, you will need to continuously fine-tune your LLMs as the data updates. From a performance perspective, at best, the LLMs lack the ability to perform simple database operations like summing or averaging, and at worst, the output can be severely plagued by hallucinations. Making company decisions based on such flawed outputs can be catastrophic without proper validation and checks, which will consume significant time and erode trust in the AI.

3. Text2SQL

Text2SQL is a technique in Natural Language Processing that converts a user’s natural language questions into SQL queries [7]. One of the most popular approaches in the current market is to convert the user’s question into SQL queries by utilizing LLMs.

Despite their popularity, the accuracy of these systems is often subpar, as their performance is affected by multiple factors, including the ambiguity of the user’s question, the complexity of SQL queries, and variability in database schemas. Additionally, one of the biggest challenges in Text2SQL is the inability to fit an entire database schema into the LLM’s context window. Even if the user manages to fit the schema into the context window, it becomes a "needle in a haystack" problem. For more information on the needle-in-a-haystack issue and an interesting debate on RAG, please refer to this Medium article: https://medium.com/@infiniflowai/llms-ability-to-find-needles-in-a-haystack-signifies-the-death-of-rag-0414d8818463.

In TAG’s paper, vanilla Text2SQL and Text2SQL augmented with sample rows yielded averages of 17% and 13% accuracy, respectively, against the benchmark dataset, making them undesirable in an enterprise context.

4. Retrieval Augmented Generation (RAG)

RAG is an emerging method to enhance the performance of LLMs by providing additional information that was not included during the training process, giving the LLM more context when answering a user’s question [8]. We have seen many individuals and companies vectorize the tabular data in their databases and retrieve the vectors through semantic search based on the user’s query during the answer generation process.

While RAG can be effective at retrieving semantically similar data for row-based queries, it has significant limitations when performing core database operations such as sums, averages, or counts, which are common tasks in database management. Additionally, filtering specific information does not work well, as semantic search may retrieve the very information the user wants to filter out.

In TAG’s paper, vanilla RAG and RAG with Retrieval + Reranking achieved average scores of 0% and 2%, respectively, against the benchmark dataset, making them unusable in an enterprise context.

5. Table Augmented Generation (TAG)

TAG is a key milestone in the open-source community for converting users' natural queries into data insights. TAG combines elements of Text2SQL and RAG, along with innovations in LLMs, to create a more efficient system for querying databases using natural language [4].

The core of TAG is its ability to leverage the semantic reasoning of LLMs to perform complex operations on tabular data. It comprises three key steps: synthesis, execution, and generation. With TAG, the team has harnessed AI to automate traditionally business-driven logic, aiming to accelerate the current cumbersome processes.

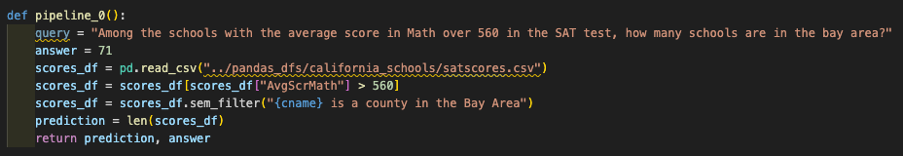

Figure 1. Sample question by the TAG Benchmark Pipeline

In the benchmark pipeline provided by the TAG team, they run each iteration of "County" through an AI (such as Llama 3.1) to retrieve the result of whether County X is part of the Bay Area. This example demonstrates how the team aims to offload business logic configuration to AI. This unlocks new possibilities, allowing users to query for information even if the required data, such as a column indicating which area a county is in, does not exist in the original database.

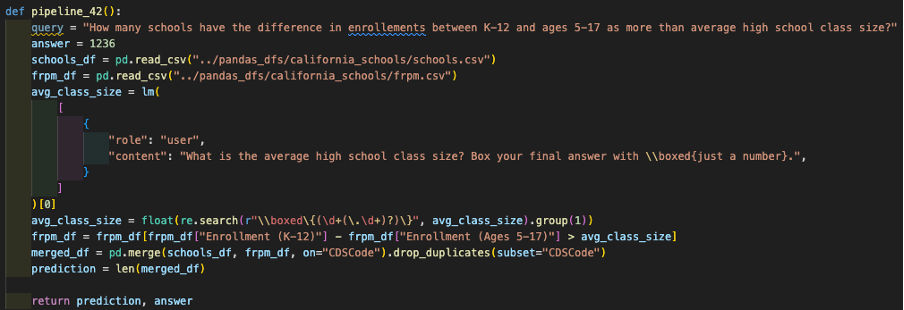

Figure 2. Sample Question by TAG Pipeline which AI Hallucination is a risk

Despite advancements in the algorithm to enhance user experience, risks such as AI hallucinations remain. For example, in this query, the AI is required to calculate the average high school class size before applying a filter. The AI assumes an average class size of 25, while the National Center for Education Statistics reports that the actual average classroom size is closer to 17 [9]. Additionally, TAG heavily relies on the cleanliness of data, requiring table names, column names, and raw data to be semantically understandable to articulate their purpose.

In TAG’s paper, TAG achieved an average accuracy of 55% against the benchmark dataset, making it the most promising approach in the open-source community.

The future looks bright for TAG despite the current risks and limitations. It offers users a new paradigm, allowing them to leverage AI to enhance their data experiences. To further improve the adoption of TAG, the team could provide a list of assumptions made by the AI for users to validate. This is particularly important for building confidence in AI's progression.

6. MetaLearner

At MetaLearner, our team has collectively accumulated decades of experience in data engineering and data science. We have developed a very different approach from the majority: we built a data platform and fine-tuned the AI to use the platform effectively. This allows us to minimize the risk of AI hallucinations while leveraging AI for a superior user experience.

We have trained our AI to navigate the california_schools database and grounded it with the necessary business logic to answer questions accurately. We tested it using the questions provided by the TAG team in their GitHub repository and achieved an impressive 93.3% accuracy.

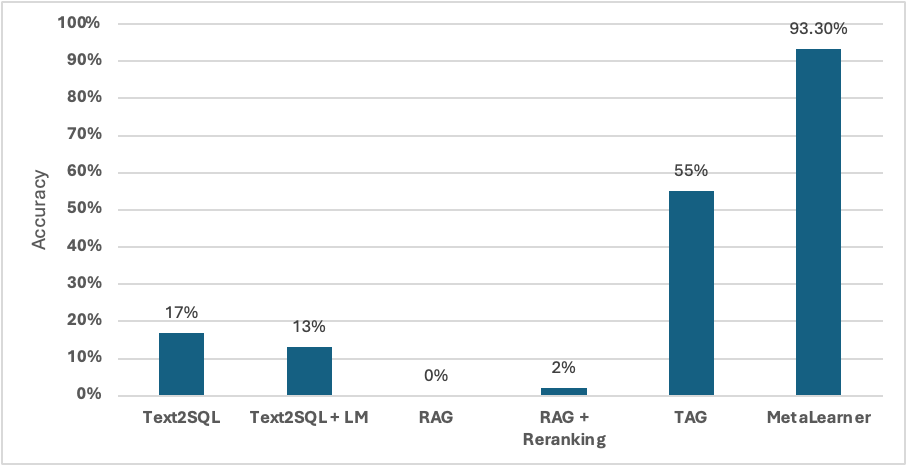

Figure 3. Methods Data Retrieval Accuracy Comparison

In benchmark comparisons, we observed that MetaLearner outperforms the existing methods highlighted in the TAG paper, primarily due to the business logic embedded in our AI and the proprietary search algorithms that help the AI synthesize the necessary SQL to retrieve data. The only question MetaLearner could not solve required the AI to infer a name's gender, which is challenging to encode in a SQL query. This is an area where TAG could be integrated to enhance MetaLearner’s existing algorithms by providing additional search capabilities.

To explore how MetaLearner handles the california_schools questions, please refer to the following Jupyter Notebook:

6.1 Embedding Business Logic as CTE

To get started, we taught the AI how to navigate the california_schools database and embedded key business knowledge, such as which counties make up the Bay Area (Alameda, Contra Costa, Marin, Napa, San Mateo, Santa Clara, Solano, Sonoma, and San Francisco) and that the average high school class size is 17. We also taught the AI that SoCal consists of counties such as Los Angeles, San Diego, Orange, Riverside, San Bernardino, Kern, Ventura, Santa Barbara, San Luis Obispo, and Imperial, among other business rules.

One of the proprietary techniques we used was embedding business logic as CTEs (Common Table Expressions), which has significantly improved the AI's recall rate compared to other methods. This highlights the importance of having data scientists build AI tools for data science rather than relying on AI scientists to build domain-specific tools. Many techniques and insights rely on the working experience accumulated by data scientists.

6.2 An AI That Learns and Improves Over Time

To ensure our clients make data-driven decisions free of hallucination, we have an onboarding process where users interact with our AI as it attempts to solve problems. Through reinforcement learning, MetaLearner picks up organization- and role-specific business logic. As usage increases, MetaLearner becomes better at solving users' queries, much like a new hire who improves over time. This approach frees users from having to craft perfect prompts, as expecting all users to be skilled in prompt engineering is impractical.

6.3 Tested in Production Data

Many current algorithms for data search expect a clean database with well-labeled semantics, but in production environments, this is rarely the case.

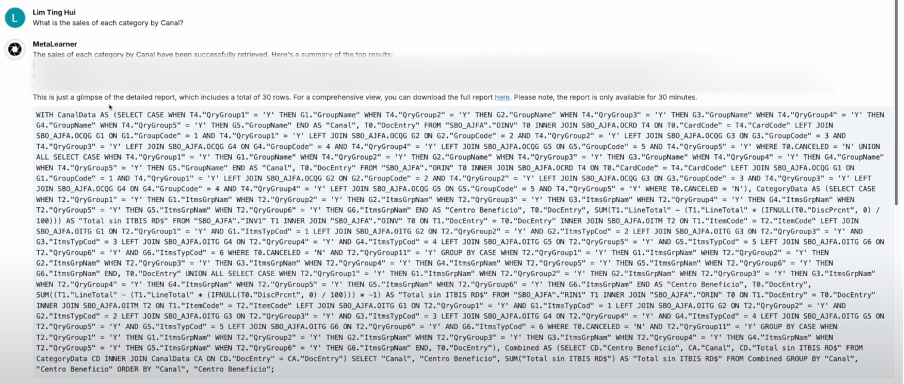

Figure 4. MetaLearner in Production Data

In Figure 4, MetaLearner is shown onboarded to a client with over 600 tables in their database. For a question as simple as "What are the sales of each category by channel?", the SQL generated by their data analysts and our AI is incredibly complex. Moreover, the table names are often ambiguous, like "OCQG" and "OINV," and column names such as "QryGroup1-5" provide little insight.

Using proprietary algorithms developed by MetaLearner, our AI can synthesize relationships across these "dirty" tables, enabling our system to retrieve the data relevant to the user’s query. MetaLearner automates mundane tasks like data retrieval and data engineering, which form the foundation of all data-driven decisions in areas like data science and business intelligence. With MetaLearner, users no longer need to invest significant time and resources in data cleaning and engineering, accelerating decision-making across the organization.

References

[1] “Future workforce report 2022,” Upwork, https://www.upwork.com/research/future-workforce-report-2022 (accessed Oct. 9, 2024).

[2] M. Goetz, J. Vale, G. Leganza, and E. Miller, “Data Performance Management is essential to prove data’s roi,” Forrester, https://www.forrester.com/report/Build-Trusted-Data-With-Data-Quality/RES83344 (accessed Oct. 9, 2024).

[3] X. Guo and Y. Chen, “Generative AI for Synthetic Data Generation: Methods, challenges and the future,” arXiv, https://arxiv.org/html/2403.04190v1#:~:text=The%20recent%20surge%20in%20research%20focused%20on%20generating (accessed Oct. 10, 2024).

[4] A. Biswal et al., “Text2SQL is Not Enough: Unifying AI and Databases with TAG,” arXiv, https://arxiv.org/html/2408.14717v1 (accessed Oct. 10, 2024).

[5] D. Armano, “LLM Inc.: Every business will have have their own large language model,” Forbes, https://www.forbes.com/sites/davidarmano/2023/09/20/llm-inc-every-business-will-have-have-their-own-large-language-model/ (accessed Oct. 11, 2024).

[6] C. Coles, “11% of data employees paste into CHATGPT is confidential,” Cyberhaven, https://www.cyberhaven.com/blog/4-2-of-workers-have-pasted-company-data-into-chatgpt (accessed Oct. 11, 2024).

[7] Z. Li et al., “PET-SQL: A Prompt-Enhanced Two-Round Refinement of Text-to-SQL with Cross-consistency,” arXiv, https://arxiv.org/pdf/2403.09732 (accessed Oct. 11, 2024).

[8] S. Ahmed, “What is retrieval-augmented generation(rag) in LLM and how it works?,” Medium, https://medium.com/@sahin.samia/what-is-retrieval-augmented-generation-rag-in-llm-and-how-it-works-a8c79e35a172 (accessed Oct. 11, 2024).

[9] “National teacher and principal survey (NTPS),” National Center for Education Statistics (NCES) Home Page, a part of the U.S. Department of Education, https://nces.ed.gov/surveys/ntps/estable/table/ntps/ntps2021_fl07_t1n (accessed Oct. 11, 2024).