MetaLearner's Automated Intelligence Stack

Introduction

In the world of Enterprise/B2B Software, we are unlocking a new era of possibilities in the “Automated Intelligence” space, where tasks that once required the constant attention of a human can now be automated through Artificial Intelligence. MetaLearner has been at the forefront of this movement, working to automate the entire end‑to‑end data lifecycle, from data retrieval to data cleaning to forecasting.

Initially, many enterprises claimed they could achieve this. Now, we’re seeing increasing skepticism, with some saying such automation is not possible. MetaLearner has proven otherwise. The automated data lifecycle is possible and has been in production since 2024. The key ingredients: a blend of culture, talent, and the perseverance to keep pushing innovation forward, even in the face of difficulties.

Our AI agents are built specifically to work with your company's ERP data, offering a level of customization and precision that generic solutions simply can’t match. Whether it’s querying datasets, generating insightful charts, or forecasting trends, MetaLearner agents excel because they are designed from the ground up to understand your enterprise data landscape. Here’s how each AI agent does it.

Technology Stack Overview

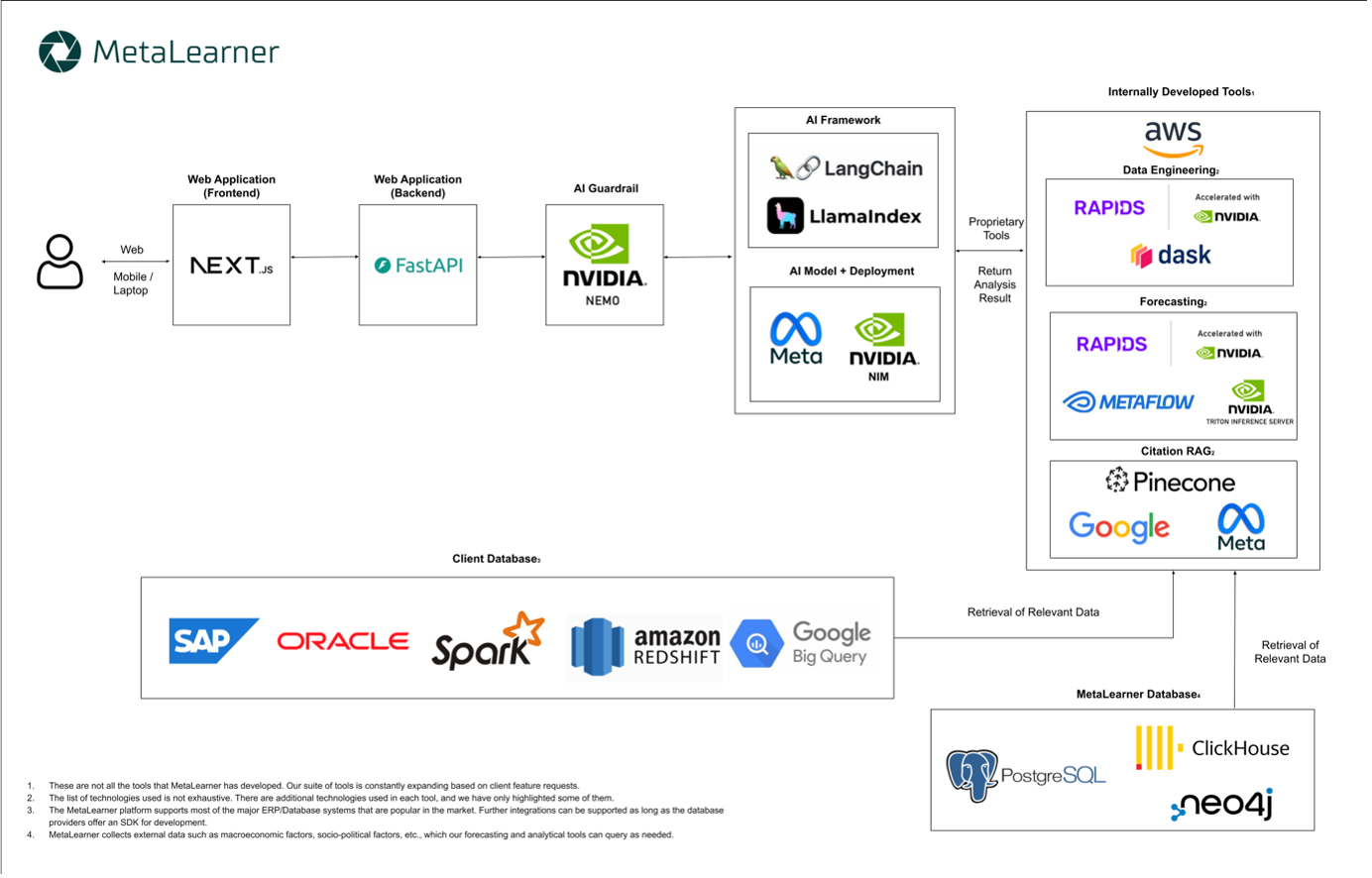

MetaLearner’s technology stack is illustrated in the software architecture diagram in Figure 1. The component labeled “Internally Developed Tools” refers to proprietary tools that MetaLearner’s AI Agents use to fulfill user requests. A new database integration can be supported within one week, provided the database offers an SDK. Each LLM deployed by MetaLearner has been trained to operate these tools.

Figure 1. MetaLearner Software Architecture

Database Agent

Data powers downstream value creation, such as forecasting and decision‑making. However, in the supply chain domain, many managers struggle to locate the data they need and understand the complex relationships between tables. To address this, MetaLearner has developed a proprietary method that dynamically adjusts the attention of our LLM (LLaMA 3.3 70B) based on user requests.

During onboarding (a 2–3 week process), we train the LLM to navigate the user’s database using its schema. The LLM learns to generate correct SQL queries through a Reinforcement Learning from Human Feedback (RLHF) process. When an initial attempt is incorrect, the feedback teaches the model to write the accurate query, so it improves over time.
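One ingredient of such a feedback process is a signal that says whether a generated query is usable at all. The sketch below is not MetaLearner's training procedure; it only illustrates, under assumptions, how execution feedback for candidate queries could be collected. The `sales` table, the candidate queries, and the `score_candidate` helper are all hypothetical, and an in-memory SQLite database stands in for the customer's database.

```python
import sqlite3

def score_candidate(conn, sql):
    """Run one generated query and return (executed_ok, error_message)."""
    try:
        conn.execute(sql).fetchall()
        return True, None
    except sqlite3.Error as exc:
        # The error text is the kind of feedback a reviewer or trainer could use.
        return False, str(exc)

# Hypothetical schema standing in for a customer's ERP table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (item_id TEXT, month TEXT, units INTEGER)")

# Hypothetical model outputs for "total units per item".
candidates = [
    "SELECT item, SUM(units) FROM sales GROUP BY item",        # wrong column name
    "SELECT item_id, SUM(units) FROM sales GROUP BY item_id",  # valid
]

# Each record pairs a query with its outcome: (sql, executed_ok, error_message).
feedback = [(sql, *score_candidate(conn, sql)) for sql in candidates]
```

A record that failed to execute carries its error message, which could be stored next to the human-approved correction for later training.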

Once data is retrieved, it is cleaned and analyzed by our AI Agent using a data engineering pipeline built on Polars with GPU acceleration. This pipeline identifies key outliers and ensures data is clean and analysis‑ready.
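As an illustration of the outlier step, here is a minimal sketch that flags values outside the interquartile-range (IQR) fences. It assumes an IQR rule, which the text does not specify, and it uses plain Python rather than Polars so that it runs without extra dependencies. The function name `flag_outliers_iqr`, the multiplier `k`, and the sample numbers are hypothetical.

```python
from statistics import quantiles

def flag_outliers_iqr(values, k=1.5):
    """Return one boolean per value, True where it lies outside the IQR fences."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v < lower or v > upper for v in values]

# Hypothetical monthly unit sales with one suspicious spike.
monthly_units = [120, 125, 118, 130, 122, 900, 127, 119]
flags = flag_outliers_iqr(monthly_units)

# Keep only the values that were not flagged.
clean = [v for v, is_outlier in zip(monthly_units, flags) if not is_outlier]
```

In practice a flagged value would more likely be reviewed or imputed than silently dropped; the filter here only keeps the example short.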

After training, the Database Agent is deployed for testing during the proof‑of‑concept (POC) phase. User feedback during this phase fuels further learning, forming an automated reinforcement loop in which the AI refines its performance. This process is akin to onboarding a new hire: the AI begins with limited business context but quickly adapts and incorporates learnings from each interaction.

External Data

MetaLearner’s Exogenous Agent handles all external data integrations. This data is used in two primary ways:

- Improving Forecast Accuracy: External features, such as macroeconomic indicators, tourist arrivals, weather data, and domain‑specific datasets, are collected and validated by MetaLearner’s team. Only useful features are added to our central “Feature Space,” a data repository used by the Forecast Agent. This ensures relevant external variables are incorporated into forecasting pipelines without introducing unnecessary noise. Further benefits are outlined in the “Explainable Forecast” section.

- Early Warning System: The Exogenous Agent continuously monitors public supply chain forums and news sources for potential disruptions. For rare but impactful events, such as tariff changes or black swan disruptions (e.g., shipping route closures), the agent identifies at‑risk customers. It then triggers the Database Agent to analyze customer‑specific impacts. For example, in the case of a tariff change, the Database Agent evaluates whether similar historical tariff deltas led to statistically significant changes in item performance. If an impact is detected, the Forecast Agent is invoked to adjust predictions. The adjustment moves the forecast mean toward the upper or lower bound of the confidence interval (CI) while keeping it within the CI. Similarly, for disruptions like shipping route closures, the Exogenous Agent queries the Database Agent for supplier and customer data. If a disruption is validated for one customer, the analysis is extended to other customers with similar profiles.
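The forecast adjustment described in the Early Warning System can be sketched as a bounded interpolation. This is a minimal illustration, not the production rule: the `impact` score in [-1, 1] and the function name `adjust_forecast` are assumptions introduced here.

```python
def adjust_forecast(mean, lower, upper, impact):
    """Move the point forecast toward an interval bound without leaving the interval.

    impact is a hypothetical score in [-1, 1]: +1 moves the forecast to the
    upper bound, -1 to the lower bound, and 0 leaves it unchanged.
    """
    if not lower <= mean <= upper:
        raise ValueError("mean must lie inside [lower, upper]")
    impact = max(-1.0, min(1.0, impact))  # clamp so the result stays in the interval
    if impact >= 0:
        return mean + impact * (upper - mean)
    return mean + impact * (mean - lower)

# Hypothetical forecast of 1000 units with interval [800, 1300].
raised = adjust_forecast(1000, 800, 1300, 0.5)     # halfway to the upper bound
lowered = adjust_forecast(1000, 800, 1300, -0.25)  # a quarter of the way to the lower bound
capped = adjust_forecast(1000, 800, 1300, 2.0)     # clamped at the upper bound
```

Clamping the score is what keeps every adjusted value inside the original interval, whatever the detected impact.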

Forecast Agent

MetaLearner’s Forecast Agent uses sales data from the Database Agent and enriches it with external data from the Exogenous Agent. While the forecasting pipeline architecture remains consistent, it is dynamically optimized for each customer and domain, as detailed in our whitepaper.

The process begins with feature elimination, typically at the month‑item granularity, which is the one most commonly used by our customers. Granularity can be adjusted and is validated during onboarding. The pipeline employs a hybrid of Recursive Feature Elimination with Cross Validation and Forward Feature Selection to balance computational cost and prediction accuracy.

We then train seven foundational time‑series and regression models in parallel. Each model undergoes hyperparameter optimization using Optuna, customized to the client’s desired granularity.

To combine model outputs, we use stacking, an ensemble technique where a meta‑model dynamically assigns weights to each base model based on validation performance. Additionally, we apply Conformal Quantile Regression to generate confidence intervals with optimal width and statistical coverage guarantees.
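The calibration step of Conformal Quantile Regression can be sketched in a few lines. The version below follows the standard split-conformal recipe and assumes the lower and upper quantile predictions already exist for a held-out calibration set; the function names and the calibration numbers are hypothetical, and this is not MetaLearner's implementation.

```python
import math

def cqr_correction(y_cal, lo_cal, hi_cal, alpha=0.1):
    """Return the margin to add on both sides of a predicted quantile interval.

    A score is positive when the true value falls outside its interval and
    negative when it falls inside; the margin is an upper quantile of the scores.
    """
    scores = sorted(max(lo - y, y - hi) for y, lo, hi in zip(y_cal, lo_cal, hi_cal))
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))  # finite-sample corrected rank
    if rank > n:
        return math.inf  # too few calibration points for the requested coverage
    return scores[rank - 1]

def conformalize(lo, hi, margin):
    """Widen (or shrink, if margin is negative) a predicted interval."""
    return lo - margin, hi + margin

# Hypothetical calibration set: true values and a constant predicted interval [9, 14].
y_cal = [10, 12, 9, 15, 11, 20, 8, 13, 10]
lo_cal = [9] * len(y_cal)
hi_cal = [14] * len(y_cal)

margin = cqr_correction(y_cal, lo_cal, hi_cal, alpha=0.2)
interval = conformalize(9, 14, margin)
```

Because the margin comes from held-out errors, the interval widens where the quantile models were overconfident and can shrink where they were too cautious.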

This pipeline results in a hyper‑optimized, scalable forecasting solution tailored to each customer and each item. Each configuration (model type, feature set, and parameters) is uniquely tuned based on historical and validation data.

Explainable Forecast

A common challenge for supply chain managers is the inability to explain forecasts, as most tools rely on either overly simplistic statistical methods or black‑box models. MetaLearner addresses this by providing transparency into how each feature contributes to forecast changes.

Customer Example

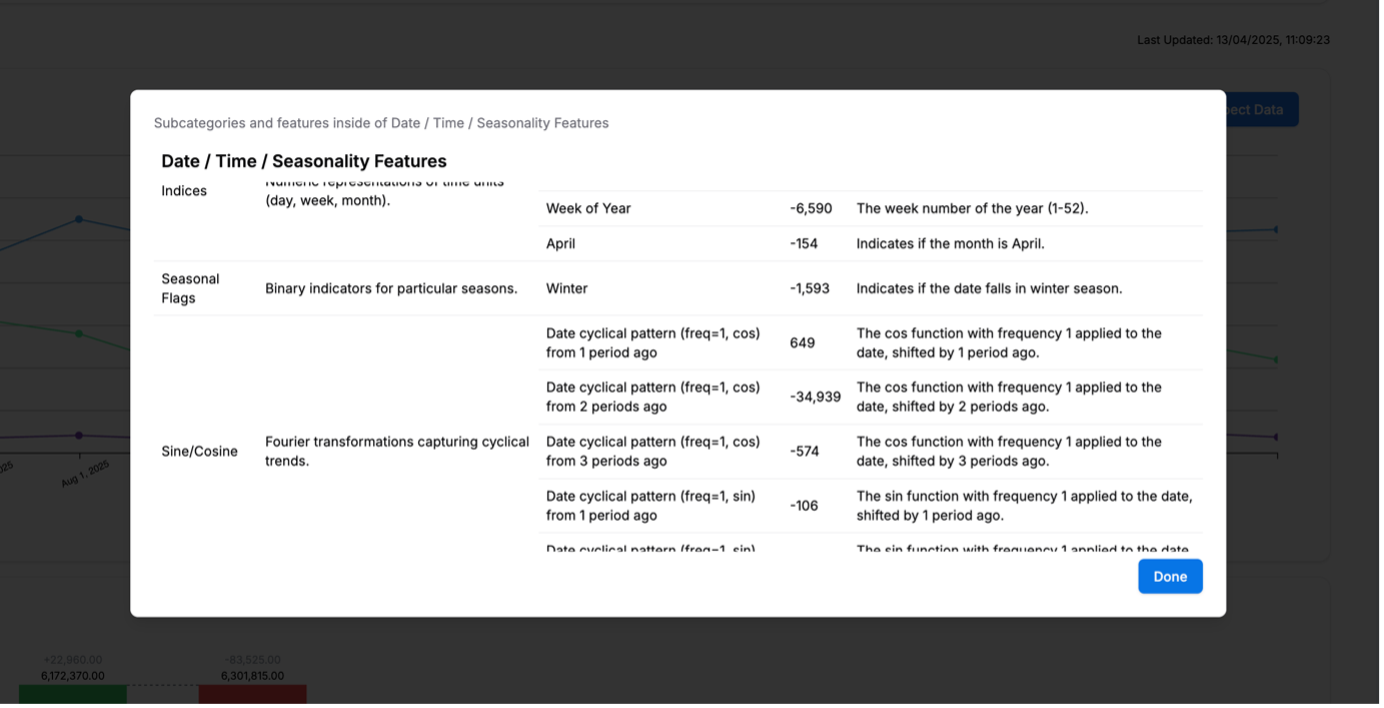

- In Figure 2, the customer can see forecasted decreases of 154 units in April and 1,593 units in winter.

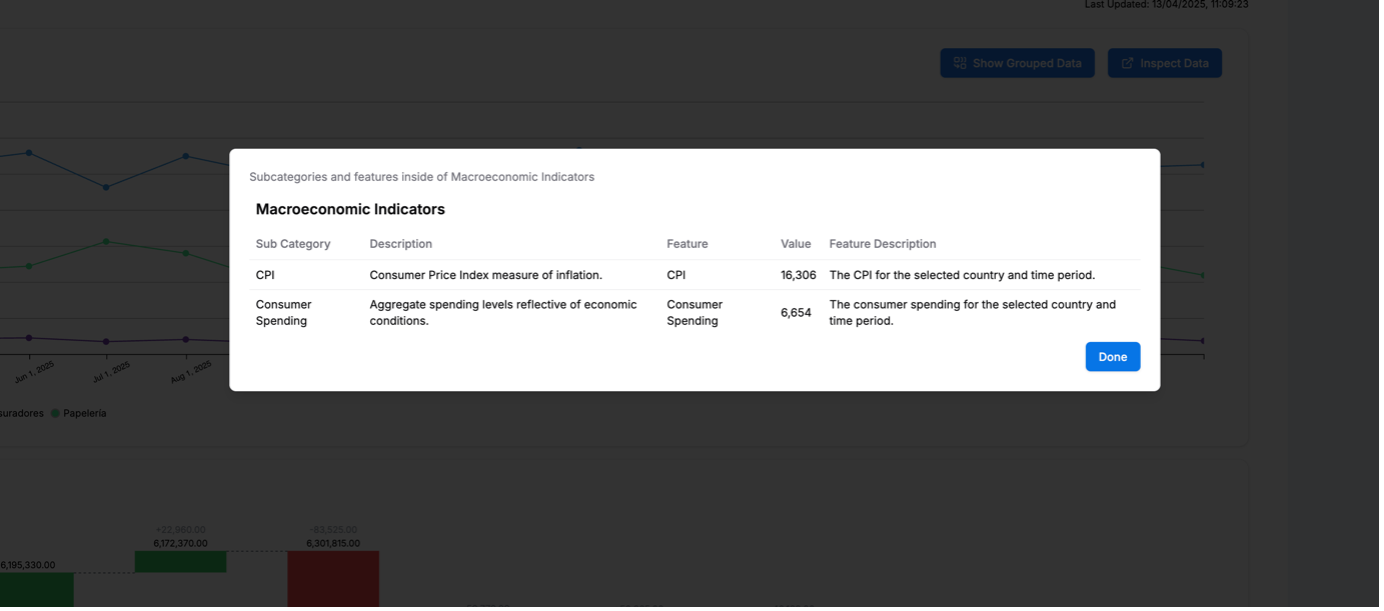

- Figure 3 shows that with current CPI levels, an increase of 16,306 units is expected.

These insights have proven extremely helpful for non‑technical users, allowing them to understand how external factors influence sales.

Figure 2. Date Time Seasonality Features for Customer

Figure 3. Macroeconomic Indicator Features for Customer

Operations Research

As global uncertainty rises, effective decision‑making becomes more critical. Traditional approaches, namely deterministic and stochastic optimization, fall short under such uncertainty:

- Deterministic optimization assumes perfect foresight.

- Stochastic optimization assumes known probability distributions, which are often unavailable or made unreliable by recent distribution shifts.

MetaLearner instead uses robust optimization, which optimizes decisions under bounded uncertainty. This approach empowers users to evaluate scenarios and make resilient decisions.
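To make "bounded uncertainty" concrete, here is a minimal sketch of a min-max ordering decision. It assumes demand is only known to lie in an interval (for example, a forecast interval) and picks the order quantity with the lowest worst-case cost. The cost model, parameter names, and numbers are hypothetical and are not taken from MetaLearner's system.

```python
def worst_case_cost(order_qty, demand_lo, demand_hi, holding_cost, shortage_cost):
    """Highest cost of an order quantity over every demand in [demand_lo, demand_hi]."""
    def cost(demand):
        overstock = max(order_qty - demand, 0)
        understock = max(demand - order_qty, 0)
        return holding_cost * overstock + shortage_cost * understock
    # The cost is convex in demand, so its maximum over an interval is at an endpoint.
    return max(cost(demand_lo), cost(demand_hi))

def robust_order_quantity(candidates, demand_lo, demand_hi, holding_cost, shortage_cost):
    """Pick the candidate quantity that minimizes the worst-case cost."""
    return min(
        candidates,
        key=lambda q: worst_case_cost(q, demand_lo, demand_hi, holding_cost, shortage_cost),
    )

# Hypothetical inputs: demand in [800, 1300], shortages four times as costly as overstock.
candidates = range(800, 1301, 100)
best_qty = robust_order_quantity(candidates, 800, 1300, holding_cost=1, shortage_cost=4)
best_cost = worst_case_cost(best_qty, 800, 1300, holding_cost=1, shortage_cost=4)
```

No probability distribution is needed: the decision depends only on the bounds, which is what separates this from stochastic optimization.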

Furthermore, traditional models often optimize a single objective (e.g., cost), ignoring the broader trade‑offs of real‑world supply chains. MetaLearner is incorporating a satisficing framework on top of robust optimization. This allows supply chain managers to define acceptable thresholds for multiple objectives, such as service level, inventory turnover, or cash flow. The system then searches for solutions that meet these targets, using forecasts and quantified uncertainties generated by MetaLearner, and relevant data retrieved by the Database Agent.
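A satisficing search can be illustrated as a filter over candidate plans: a plan is acceptable when every objective meets its threshold, and no single objective is maximized. The sketch below assumes each plan's metrics were already evaluated (for instance under worst-case demand); the plan names, metric names, and thresholds are hypothetical.

```python
def meets(value, direction, bound):
    """Check one objective against its threshold."""
    if direction == "at_least":
        return value >= bound
    if direction == "at_most":
        return value <= bound
    raise ValueError(f"unknown direction: {direction}")

def satisficing(plans, targets):
    """Return the plans that satisfy every target at once."""
    return [
        plan for plan in plans
        if all(meets(plan[metric], direction, bound)
               for metric, (direction, bound) in targets.items())
    ]

# Hypothetical candidate plans with pre-computed metrics.
plans = [
    {"name": "A", "service_level": 0.97, "inventory_turnover": 5.0, "cash_tied_up": 120_000},
    {"name": "B", "service_level": 0.93, "inventory_turnover": 7.5, "cash_tied_up": 80_000},
    {"name": "C", "service_level": 0.96, "inventory_turnover": 6.2, "cash_tied_up": 95_000},
]

# Thresholds a supply chain manager might define.
targets = {
    "service_level": ("at_least", 0.95),
    "inventory_turnover": ("at_least", 6.0),
    "cash_tied_up": ("at_most", 100_000),
}

acceptable = [plan["name"] for plan in satisficing(plans, targets)]
```

Plan A misses the turnover target and plan B misses the service level, so only plan C remains, even though it is not the best plan on any single metric.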